Artificial intelligence is transforming financial markets and reshaping the UK financial services sector. The UK’s Financial Conduct Authority (FCA) and the Prudential Regulation Authority (PRA) are taking a stronger stance on how asset managers deploy AI technologies and algorithmic systems.

In 2025, firms’ use of AI in financial services will face closer scrutiny. The FCA’s focus includes AI guidance, algorithmic trading compliance, algorithmic transparency, and the regulation of AI in asset management under the existing regulatory framework.

With AI and machine learning advancing rapidly in the UK, the FCA published its AI update, setting out its regulatory approach to AI. This year’s priorities are governance, transparency, and the safe adoption of AI tools within the Financial Services and Markets Act framework.

Algorithmic trading is faster and more complex than ever. The FCA aims to ensure AI innovation does not outpace safeguards. Keep reading to learn how to align your AI strategies with evolving FCA expectations and avoid compliance pitfalls.

The FCA’s Principles-Based, Adaptive Approach

The FCA has opted for a principles-based, pro-innovation approach to AI regulation in financial services. Rather than issuing overly prescriptive rules that could slow AI adoption, the regulator is embedding its expectations into existing regulatory frameworks, such as:

- Senior Managers and Certification Regime (SM&CR)

- Consumer Duty

- Operational resilience rules

This approach allows the FCA and PRA to regulate AI while encouraging the deployment of AI services within financial markets. It supports the UK government’s pro-innovation approach to AI regulation while ensuring that firms use AI responsibly and maintain governance over their AI models and systems.

For asset managers, this means AI will be assessed not only on performance but also on the benefits it delivers, its transparency, and its alignment with client outcomes. The regulatory approach emphasises the impact of AI on market integrity, its implications for financial stability, and the governance of AI tools.

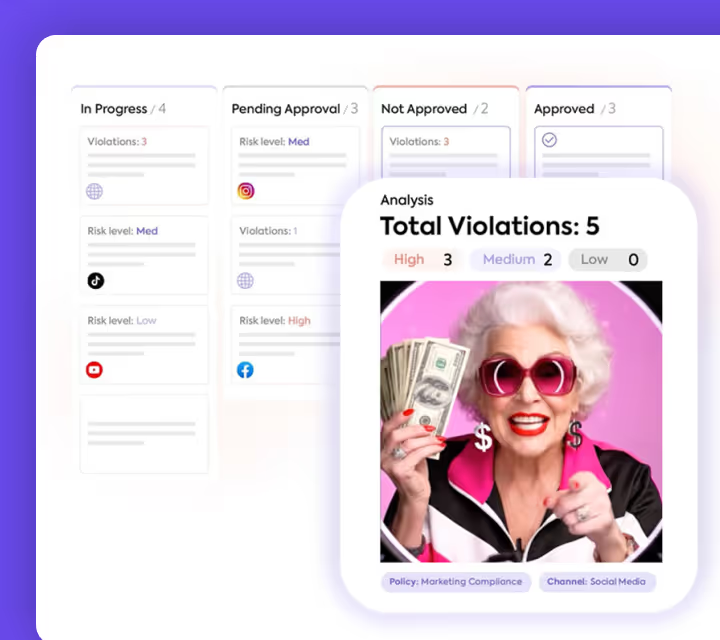

Stay ahead of FCA expectations with Sedric. Our platform helps financial services firms ensure AI governance, meet compliance standards, and deploy AI tools responsibly, especially in a market where AI is already transforming operations and regulatory focus on AI is growing.

Key FCA Expectations for Algorithmic Investment Strategies in 2025

In 2025, the FCA’s expectations for algorithmic strategies reflect the risks of AI, developments in AI and machine learning, and the need to regulate AI within the financial services sector.

Governance & Senior Accountability

Firms must demonstrate clear oversight of AI deployment by senior management under the SM&CR. Senior managers are expected to take direct responsibility for AI-powered strategies, ensuring systems and controls are robust. Governance of AI requires documented accountability structures, regular reviews, and adherence to the existing regulatory framework.

Risk Controls for Algorithmic Trading

The FCA continues to stress model risk management and operational risk management as the basis for market stability. Safe adoption of AI involves pre- and post-trade risk checks, automated kill switches, and rigorous change controls. Firms should test AI systems under varied market conditions so that their deployment does not create systemic risk.
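To make the idea of pre-trade checks and a kill switch concrete, here is a minimal Python sketch. It assumes a hypothetical `PreTradeRiskGate` class with two illustrative limits (per-order notional and per-symbol position); the names and thresholds are placeholders for illustration, not figures or interfaces prescribed by the FCA.

```python
from dataclasses import dataclass


@dataclass
class Order:
    symbol: str
    quantity: int   # signed: positive = buy, negative = sell
    price: float


class PreTradeRiskGate:
    """Hypothetical pre-trade gate; the limits are illustrative assumptions."""

    def __init__(self, max_order_notional: float, max_position: int):
        self.max_order_notional = max_order_notional
        self.max_position = max_position
        self.positions: dict[str, int] = {}
        self.killed = False

    def trip_kill_switch(self) -> None:
        # Once tripped, every subsequent order is rejected until a human resets it.
        self.killed = True

    def check(self, order: Order) -> tuple[bool, str]:
        if self.killed:
            return False, "kill switch active"
        notional = abs(order.quantity) * order.price
        if notional > self.max_order_notional:
            return False, "order notional limit exceeded"
        new_position = self.positions.get(order.symbol, 0) + order.quantity
        if abs(new_position) > self.max_position:
            return False, "position limit exceeded"
        return True, "accepted"

    def submit(self, order: Order) -> tuple[bool, str]:
        # Positions are only updated when every check passes.
        ok, reason = self.check(order)
        if ok:
            self.positions[order.symbol] = (
                self.positions.get(order.symbol, 0) + order.quantity
            )
        return ok, reason
```

In practice a gate like this would sit between the strategy and the order router, with every rejection logged so that post-trade reviews and change controls have an audit trail to work from.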

Transparency, Explainability, & Contestability

AI governance requires that financial services firms can explain AI use cases, from generative AI models to predictive analytics. Clients must have clear channels to question AI decisions. This is key to the responsible use of AI and aligns with the FCA’s AI update on transparency.

Operational Resilience & Third-Party Oversight

With firms increasingly relying on third parties for AI, including cloud AI products, the FCA may require enhanced oversight of AI service providers. This includes contingency plans to ensure AI tools can operate independently of a third party during a disruption, in line with the Financial Services and Markets Act 2023.

Pro-Innovation Support via FCA AI Initiatives

The FCA has launched initiatives such as AI sandboxes, live testing environments, and the AI Lab to help firms deploy AI in line with its regulatory approach. These support the adoption of generative AI and other AI technologies in a controlled environment.

Monitoring Systemic Risk & Market Stability

The FCA has stated that AI could accelerate innovation but also bring new risks to financial stability. AI could introduce algorithmic monocultures, correlated trading patterns, or volatility spikes. Asset managers should diversify their AI products, train AI models responsibly, and assess the impact of AI on market stability.
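One simple way to look for correlated trading patterns is to compare the return series of a firm’s own strategies. The sketch below is an illustration only: the function names, the sample data, and the 0.9 threshold are assumptions, and it flags positive correlation only, since that is the pattern associated with strategies crowding into the same trades.

```python
from itertools import combinations
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation of two equal-length return series."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length series with at least 2 points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / sqrt(var_x * var_y)


def flag_correlated_strategies(returns_by_strategy, threshold=0.9):
    """Return (name_a, name_b, rho) for pairs whose correlation meets the threshold.

    The threshold is an illustrative assumption, not a regulatory figure.
    """
    flagged = []
    pairs = combinations(sorted(returns_by_strategy.items()), 2)
    for (name_a, ret_a), (name_b, ret_b) in pairs:
        rho = pearson(ret_a, ret_b)
        if rho >= threshold:
            flagged.append((name_a, name_b, round(rho, 3)))
    return flagged


# Hypothetical daily returns for three strategies.
returns = {
    "momentum_a": [0.01, -0.02, 0.03, 0.00],
    "momentum_b": [0.02, -0.04, 0.06, 0.00],
    "mean_rev": [-0.01, 0.02, -0.03, 0.00],
}
flagged = flag_correlated_strategies(returns)
```

A pair that is flagged is a prompt for review rather than proof of a problem; a fuller analysis would use longer histories and stressed market periods.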

At Sedric, we provide AI to help firms monitor and manage AI-related risks effectively. Our expertise extends to identifying systemic vulnerabilities, strengthening model governance, and addressing key issues around AI to ensure you stay compliant and resilient in a rapidly changing market.

Strategic Considerations & Emerging Risks in 2025

The use of AI across the financial sector is expanding quickly, bringing both opportunities and risks. In 2025, firms must address these challenges strategically to protect clients, maintain compliance, and stay competitive.

Fast-Moving Technology: Judgement Over Rigid Rules

AI in UK financial markets is evolving quickly. The FCA’s approach will need to adapt, and firms must interpret guidance proactively. The UK financial services sector must navigate both the benefits of AI and the risks of AI, especially in cross-border regulation of AI.

Bias, Discrimination, and Exclusion Risks

Artificial intelligence and machine learning can unintentionally embed bias. Governance of AI within financial services requires regular monitoring of AI tools for discrimination risks.
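As an illustration of what regular monitoring could look like, the sketch below compares the rate of favourable outcomes an AI tool produces for different client groups. The group labels, sample data, and the 0.8 review threshold are assumptions chosen for the example; the FCA does not prescribe this metric or this threshold.

```python
from collections import defaultdict


def favourable_rates(decisions):
    """decisions: iterable of (group, favourable) pairs, favourable being a bool."""
    totals = defaultdict(int)
    favourable = defaultdict(int)
    for group, outcome in decisions:
        totals[group] += 1
        if outcome:
            favourable[group] += 1
    return {group: favourable[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Lowest group rate divided by the highest; 1.0 means identical rates."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favourable outcome, so rates are equal
    return min(rates.values()) / highest


# Hypothetical decision log: group A approved 8 of 10, group B approved 5 of 10.
decisions = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)
rates = favourable_rates(decisions)
ratio = disparity_ratio(rates)

REVIEW_THRESHOLD = 0.8  # illustrative assumption, not a regulatory figure
needs_review = ratio < REVIEW_THRESHOLD
```

A low ratio does not by itself show discrimination, since groups can differ for legitimate reasons, but it marks where a human review of the model and its training data should start.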

Asset Tokenisation & Innovation

AI could help enhance asset tokenisation, but any AI deployment must comply with the regulatory framework for AI in financial services.

International Coordination & Regulatory Divergence

AI regulation in financial services differs globally. Firms operating internationally must track developments in AI and AI regulation in the UK while understanding other jurisdictions’ approaches.

What This Means for Asset Managers

For firms using AI in financial services, compliance requires sound governance of AI, controlled deployment and use of AI tools, and proactive AI adoption strategies. The FCA’s AI update reinforces that AI services in asset management must align with the responsible use of AI and the framework for AI regulation.

The most competitive financial services firms will see AI innovation as an opportunity, deploying AI use cases responsibly and within the existing regulatory framework.