The Financial Conduct Authority’s expectations of AI-driven decision-making are reshaping the compliance landscape. For firms that build with algorithms, the era of ‘black box’ logic is drawing to a close.

As FinTech firms and financial institutions continue their embrace of artificial intelligence—using models to underwrite loans, flag suspicious activity, or tailor customer journeys—the UK regulator is sharpening its gaze.

The Financial Conduct Authority (FCA), often seen as a steward of both innovation and integrity, is not just paying attention to how artificial intelligence is used. It is scrutinising the very assumptions behind it. In the context of the Consumer Duty, effective since July 2023, that scrutiny is no longer theoretical. It is regulatory.

The result? A subtle but decisive pivot in what “compliance” means in the age of automation.

From Principles to Parameters

The Consumer Duty sets a clear expectation that firms deliver “good outcomes” for retail customers across four areas: products and services, price and value, consumer understanding, and consumer support. Yet for AI-reliant firms, a more foundational question arises:

How can a firm guarantee a good outcome when it cannot fully explain the mechanism that produced it?

This is not just a philosophical dilemma; it is a practical one. Large language models and deep-learning credit scorers offer firms immense efficiencies, but their opacity threatens a cornerstone of FCA expectations: explainability. If a customer is declined for a credit product, misclassified during onboarding, or nudged toward an unsuitable investment, can the firm trace the logic behind that outcome?

“Firms must be able to evidence the fairness of their algorithms,” the FCA noted in its response to the AI Public-Private Forum. In other words, the burden of proof has shifted.

What the Duty Demands from Data Science

While the Consumer Duty makes no explicit mention of machine learning or artificial intelligence, its requirements have sharp implications for how firms build and deploy them:

- Explainability: Outcomes driven by AI must be intelligible not just to data scientists, but to compliance officers—and ultimately to consumers.

- Fair value: Pricing models that personalise offers based on opaque correlations risk failing the price and value outcome if they consistently disadvantage vulnerable customers.

- Support: AI-enhanced chatbots or decisioning systems must not impede access to human help—particularly for complex issues like fraud or financial hardship.
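To make the fair value concern concrete, here is a minimal Python sketch of one possible monitoring check. It assumes the firm already holds a vulnerability flag for each customer and logs the APR each customer was offered; it compares the average APR offered to customers flagged as vulnerable with the average for everyone else, and raises a flag when the ratio exceeds a tolerance. The `Offer` schema, the `fair_value_gap` function and the 5% tolerance are hypothetical choices for illustration, not FCA requirements.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Offer:
    """One personalised price quote (hypothetical schema)."""
    customer_id: str
    vulnerable: bool  # assumed flag from the firm's own vulnerability assessment
    apr: float        # annual percentage rate offered, e.g. 0.129 for 12.9%


def fair_value_gap(offers: list[Offer], tolerance: float = 1.05) -> dict:
    """Compare the average APR offered to vulnerable vs other customers.

    `tolerance` is an illustrative assumption, not a regulatory threshold:
    the check flags the model if vulnerable customers are offered, on average,
    more than `tolerance` times the APR offered to other customers.
    """
    vulnerable = [o.apr for o in offers if o.vulnerable]
    others = [o.apr for o in offers if not o.vulnerable]
    if not vulnerable or not others:
        raise ValueError("need offers from both segments to compare")

    ratio = mean(vulnerable) / mean(others)
    return {
        "mean_apr_vulnerable": mean(vulnerable),
        "mean_apr_others": mean(others),
        "ratio": ratio,
        "flag": ratio > tolerance,  # True means: investigate this pricing model
    }


# Usage with made-up quotes: vulnerable customers average 21% APR, others 16%.
offers = [
    Offer("c1", True, 0.20),
    Offer("c2", True, 0.22),
    Offer("c3", False, 0.15),
    Offer("c4", False, 0.17),
]
result = fair_value_gap(offers)
```

A raw gap like this is a prompt for investigation rather than proof of poor value: differences may reflect legitimate risk factors, so a fuller assessment would compare like-for-like customers.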

Thus, model governance becomes customer protection. And customer protection becomes regulatory risk.

The Human-AI Interface: Mind the Accountability Gap

One of the most vexing questions facing FCA-regulated firms is where responsibility lies when a machine acts autonomously but not necessarily wisely. Can a firm delegate decision-making without delegating accountability?

The regulator’s view is crisp: no.

Firms must maintain “appropriate human oversight of automated systems.” This aligns with the UK Government’s 2023 AI Regulation White Paper, which sets out five cross-sectoral principles, among them safety, security and robustness; appropriate transparency and explainability; and accountability and governance.

Designing for Duty: A Compliance-Aware AI Stack

The answer is not to retreat from AI, but to build with it responsibly.

Compliance-by-design is becoming a competitive differentiator in the FinTech sector. The most forward-thinking firms are embedding compliance logic directly into the model lifecycle, from training through to live decisioning. Examples include:

- Audit trails that record model versions and inputs at the point of decision

- Model cards that capture intent, limitations, and known biases

- “Fairness fire drills” that simulate unintended outcomes and trigger alerts
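Two of these practices can be sketched in a few lines of Python, under stated assumptions. The first part writes an audit-trail entry at the moment of decision: the model version, the inputs, a SHA-256 hash of the canonicalised inputs, and the outcome. The second part is a simple “fire drill” that replays synthetic applicants differing only in one attribute and returns an alert whenever the outcome changes. All names (`MODEL_VERSION`, `record_decision`, `fairness_fire_drill`, `toy_model`) are hypothetical, the in-memory list stands in for an append-only store, and the toy model is deliberately flawed so the drill has something to catch. This is an illustration, not a description of any particular firm's stack.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Callable

MODEL_VERSION = "credit-scorer-1.4.2"  # hypothetical version identifier


@dataclass
class DecisionRecord:
    """One audit-trail entry, written at the moment a decision is made."""
    timestamp: str
    model_version: str
    inputs: dict
    input_hash: str
    outcome: str


def record_decision(inputs: dict, outcome: str, log: list) -> DecisionRecord:
    # Canonical JSON (sorted keys) so identical inputs always hash identically.
    canonical = json.dumps(inputs, sort_keys=True)
    record = DecisionRecord(
        timestamp=datetime.now(timezone.utc).isoformat(),
        model_version=MODEL_VERSION,
        inputs=inputs,
        input_hash=hashlib.sha256(canonical.encode("utf-8")).hexdigest(),
        outcome=outcome,
    )
    log.append(asdict(record))  # stand-in for an append-only audit store
    return record


def fairness_fire_drill(
    model: Callable[[dict], str],
    base_cases: list[dict],
    attribute: str,
    values: tuple,
) -> list[dict]:
    """Replay each base case with `attribute` set to every value in `values`.

    Returns one alert per case whose outcome changes when only that
    attribute changes.
    """
    alerts = []
    for case in base_cases:
        outcomes = {v: model({**case, attribute: v}) for v in values}
        if len(set(outcomes.values())) > 1:
            alerts.append({"case": case, "outcomes": outcomes})
    return alerts


def toy_model(applicant: dict) -> str:
    # Deliberately flawed: penalises the vulnerability flag directly.
    score = applicant["income"] / 1000 - (10 if applicant["vulnerable"] else 0)
    return "approve" if score >= 30 else "decline"


# Usage: log one live decision, then run the drill on two synthetic applicants.
audit_log: list = []
applicant = {"income": 35000, "vulnerable": False}
record_decision(applicant, toy_model(applicant), audit_log)

alerts = fairness_fire_drill(
    toy_model, [{"income": 35000}, {"income": 50000}], "vulnerable", (False, True)
)
print(len(alerts))  # → 1 (the 35,000 applicant flips from approve to decline)
```

Hashing a key-sorted serialisation means the same inputs always produce the same digest, which helps show later that a logged decision matches what the model actually saw. A paired drill like this only catches direct dependence on the tested attribute; proxies such as postcode would need separate testing.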

These are not mere artefacts—they are the architecture of operational resilience. When a complaint arrives, or a review begins, the firm can demonstrate that it did not merely comply with the rules, but that it understood them deeply.

This aligns with themes in the FCA’s ongoing work on operational resilience.

The Path Forward: Wisdom as a Feature

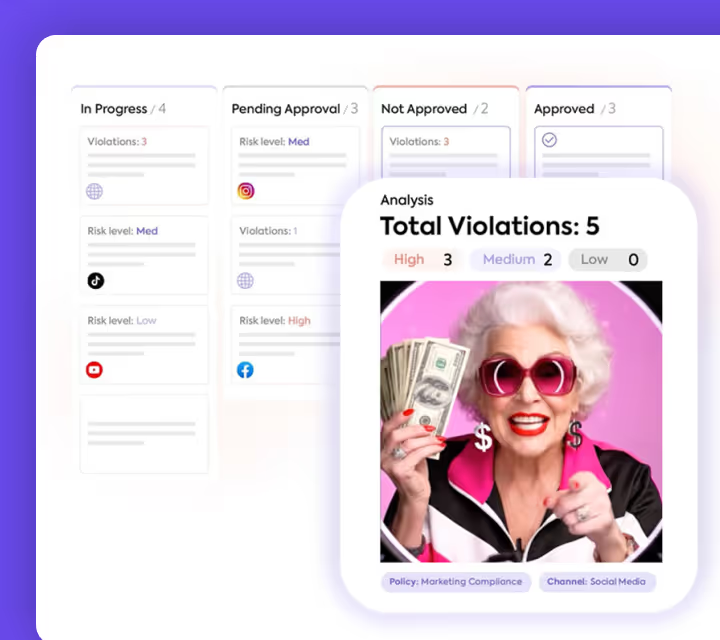

In many ways, the FCA is asking AI-enabled firms to be more human. To exercise judgment, not just automate. To design systems that are transparent, auditable, and ultimately oriented toward customer good, not just technical performance. That's why we built Sedric's AI to be auditable and explainable: to meet the demanding needs of financial regulatory compliance.

As algorithmic decision-making becomes more embedded in financial journeys, the challenge will not be to eliminate risk, but to make it knowable.

Firms that meet this challenge will not only navigate the regulatory terrain more safely—they will earn a more durable form of trust. Not blind trust, but informed trust: trust with evidence, intention, and accountability.

That, after all, is the point of Consumer Duty. And it may become the north star of AI compliance in Britain.