In compliance circles, few acronyms inspire as much groaning as UDAAP—Unfair, Deceptive, or Abusive Acts or Practices. It’s the “catch-all” regulatory bucket that makes even seasoned compliance officers pause before approving that new marketing campaign, designing a product feature, or writing a customer communication.

Why? Because unlike highly prescriptive rules (think Reg Z or Reg E), UDAAP is broad, principle-driven, and constantly evolving. A practice that passes muster today may be judged unfair, deceptive, or abusive tomorrow as regulatory guidance, enforcement priorities, market practices, and consumer expectations shift.

But here’s the good news: artificial intelligence—when applied thoughtfully—can help organizations spot, prevent, and even predict UDAAP risk. No, AI won’t replace compliance officers (sorry, Skynet), but it can serve as a powerful assistant in the ongoing mission to protect consumers and keep regulators off our backs.

Why UDAAP Keeps Leaders Up at Night

UDAAP risk is unique because it lives in the gray. Was that disclosure “clear and conspicuous,” or did it get buried in fine print? Did a pricing change create an unfair outcome for a vulnerable consumer segment? Did a chatbot give advice that was technically accurate but practically misleading?

Traditional compliance testing often finds these issues after the fact—when the product is live, the campaign has run, or the customer has already been harmed. By then, the remediation costs (not to mention reputational damage) are real.

Executives and boards increasingly want proactive solutions. Enter AI.

AI as a UDAAP Co-Pilot

Think of AI less as a replacement for judgment and more as a co-pilot that never sleeps. Properly trained, AI systems can:

- Scan communications at scale: Instead of relying on manual reviews of hundreds of marketing emails or app notifications, AI can flag phrases that might be misleading or confusing. Think of it as spellcheck—but for consumer protection.

- Spot patterns humans might miss: By analyzing millions of transactions, AI can highlight pricing or fee structures that disproportionately affect certain groups. What looks like a minor glitch to the human eye may be systemic unfairness at scale.

- Stress test product changes: Before a new feature rolls out, AI models can simulate consumer interactions and flag areas where disclosures may be misunderstood or where outcomes may skew negative.

- Translate regulatory language into operational guardrails: AI can help turn broad expectations like “avoid abusive practices” into concrete watchlists of risky practices, disclosures, or product features that compliance teams can then review and refine.
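To make the “spellcheck for consumer protection” and “watchlist” ideas concrete, here is a minimal sketch of a communications scan. It is a plain rule-based pattern matcher, not a trained AI model, and the phrases and reasons in `RISKY_PHRASES` are hypothetical examples. In a real program, compliance would own that list, and every flag would go to a human reviewer. Each flag carries a human-readable reason, which also supports the auditability point that follows.

```python
import re
from dataclasses import dataclass

# Hypothetical watchlist: regex pattern -> why a reviewer should look closer.
# These entries are illustrative assumptions; compliance would define the real list.
RISKY_PHRASES = {
    r"\bguaranteed\b": "Absolute claim; confirm it holds for every customer",
    r"\bno fees?\b": "Fee claim; confirm no fees apply under any condition",
    r"\bfree\b": "'Free' claim; check for conditions or later charges",
    r"\bpre-?approved\b": "Approval claim; confirm it is not conditional",
}

@dataclass
class Flag:
    phrase: str  # exact text that matched
    start: int   # character offset of the match in the message
    reason: str  # explanation shown to the human reviewer

def scan_message(text: str) -> list[Flag]:
    """Return every watchlist match in `text`, ordered by position."""
    flags = []
    for pattern, reason in RISKY_PHRASES.items():
        for match in re.finditer(pattern, text, flags=re.IGNORECASE):
            flags.append(Flag(match.group(0), match.start(), reason))
    return sorted(flags, key=lambda f: f.start)

# Usage: scan a draft marketing email before it goes to legal review.
email = "Open an account today: no fees, guaranteed approval, and a FREE gift!"
for flag in scan_message(email):
    print(f"{flag.start:>3}  {flag.phrase!r}: {flag.reason}")
```

A keyword list like this is easy to explain to an examiner but will miss paraphrases and context; that gap is where a trained language model could add value, provided its outputs stay just as reviewable.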

But Let’s Be Clear: AI Isn’t a Magic Wand

Here’s the catch: AI itself can introduce UDAAP risk if used carelessly. Imagine an AI-powered chatbot giving “personalized financial advice” that leads a consumer into higher fees. Or an algorithm that unintentionally steers vulnerable customers toward costlier products.

That’s why governance is everything. Using AI to manage UDAAP risk means building a framework that includes:

- Human in the loop: AI highlights risks, but humans make the judgment calls.

- Bias monitoring: Models must be regularly tested to ensure they don’t replicate or amplify unfair patterns.

- Auditability: Regulators will want to know not just what the AI flagged, but why. Transparent, explainable outputs are critical.

- Continuous learning: As regulatory expectations shift, AI systems must be updated with new training data and rules of the road.

Real-World Applications That Work

Forward-leaning organizations are already experimenting with AI in compliance, and the early wins are encouraging:

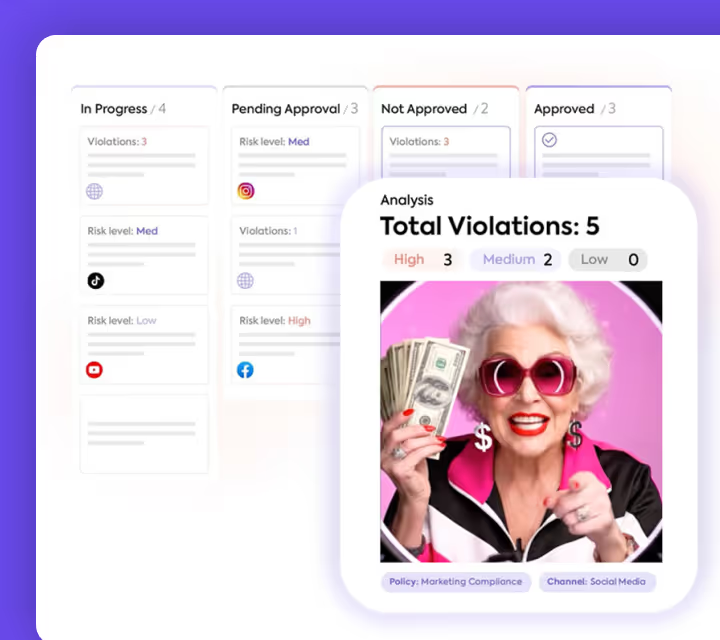

- Marketing content review: AI systems pre-screen promotional copy for risky terms before legal ever sees it, reducing bottlenecks and freeing counsel to focus on higher-risk areas.

- Complaint analysis: AI digs into thousands of consumer complaints to identify trends that suggest potential unfairness. Instead of anecdotal “we heard a few customers say…,” leaders get data-driven insight.

- Transaction monitoring with a UDAAP lens: Beyond its traditional BSA/AML role, transaction monitoring can use AI to flag unusual consumer outcomes, like recurring fees that hit low-balance customers disproportionately.
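The “recurring fees that hit low-balance customers disproportionately” check can start as a simple outcome comparison. The sketch below is descriptive statistics rather than machine learning. The record layout (average balance, fees charged), the tier cut-offs, and the 1.5 times threshold are all hypothetical assumptions. A flag is a prompt for human review, not a finding of unfairness.

```python
from collections import defaultdict

# Illustrative balance tiers as (floor in dollars, label). These cut-offs are
# assumptions for the example, not regulatory thresholds.
TIERS = [(0, "under $500"), (500, "$500-$4,999"), (5000, "$5,000+")]

def tier_for(balance: float) -> str:
    """Return the label of the highest tier whose floor the balance reaches."""
    label = TIERS[0][1]  # negative balances fall into the lowest tier
    for floor, name in TIERS:
        if balance >= floor:
            label = name
    return label

def fee_burden_by_tier(accounts: list[tuple[float, float]]) -> dict[str, float]:
    """Average fees charged per account in each balance tier."""
    totals: dict[str, float] = defaultdict(float)
    counts: dict[str, int] = defaultdict(int)
    for balance, fees in accounts:
        tier = tier_for(balance)
        totals[tier] += fees
        counts[tier] += 1
    return {tier: totals[tier] / counts[tier] for tier in counts}

def disproportionate_tiers(accounts: list[tuple[float, float]],
                           threshold: float = 1.5) -> dict[str, float]:
    """Map each tier whose average fee is >= `threshold` x the portfolio-wide average to that ratio."""
    if not accounts:
        return {}
    overall = sum(fees for _, fees in accounts) / len(accounts)
    if overall == 0:
        return {}  # no fees charged at all, so nothing to compare
    burden = fee_burden_by_tier(accounts)
    return {tier: avg / overall for tier, avg in burden.items() if avg / overall >= threshold}

# Hypothetical monthly data: (average balance, fees charged this month).
accounts = [
    (120, 35.0), (80, 70.0), (300, 0.0), (450, 35.0),  # low-balance customers
    (1200, 0.0), (2500, 5.0), (4000, 0.0),
    (8000, 0.0), (15000, 0.0),
]
print(disproportionate_tiers(accounts))
```

With this made-up data, only the under-$500 tier is flagged: its average fee is about 2.2 times the portfolio average. Comparing against the portfolio-wide average, rather than against the highest tier, avoids dividing by zero when wealthier customers pay no fees at all.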

Why This Matters for Leadership

For leaders, UDAAP isn’t just a compliance issue—it’s a trust issue. Consumers who feel mistreated don’t just file complaints; they leave, they post on social media, and they influence regulators’ priorities.

AI provides an opportunity to shift from reactive compliance to proactive consumer protection. Instead of asking, “Did we break the rule?” leaders can ask, “Would this feel fair if I were the customer?” AI helps scale that empathy across millions of interactions.

A Balanced Path Forward

Here’s the mindset I encourage: treat AI like an enthusiastic new analyst on your compliance team. It’s fast, it’s eager, it’s great at sifting through mountains of data—but it still needs oversight, training, and guidance.

Use it to expand your reach, sharpen your focus, and give your human experts more time to apply judgment where it counts. With the right balance, AI becomes not just a cost-saver, but a trust-builder.

Closing Thoughts

UDAAP isn’t going away. If anything, the bar for what regulators and consumers consider “fair” will only rise. Leaders who harness AI to get ahead of that curve won’t just reduce risk—they’ll create competitive advantage.

Because at the end of the day, compliance isn’t just about avoiding fines. It’s about building products and experiences that consumers believe in. And when algorithms meet acronyms, that’s where real trust can be won.